NVIDIA vGPU with Red Hat OpenStack Platform 14

Red Hat OpenStack Platform 14 is now generally available \o/

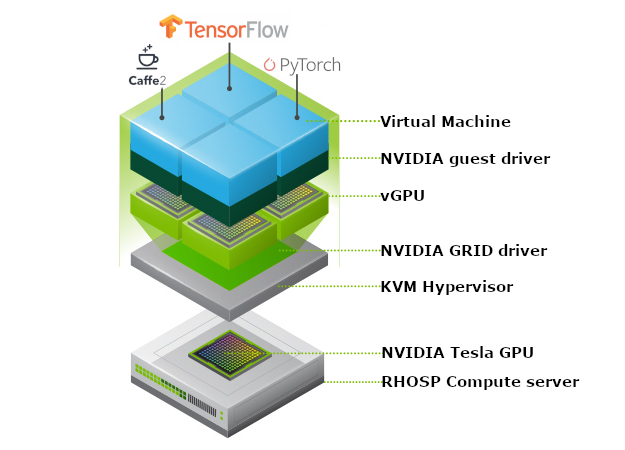

NVIDIA GRID capabilities are available as a technology preview to support NVIDIA Virtual GPU (vGPU). Multiple OpenStack instances (virtual machines) can have simultaneous, direct access to a single physical GPU. vGPU configuration is fully automated via Red Hat OpenStack Platform director.

- Description of the platform

- Install KVM on RHEL 7.6 server

- Director installation

- Prepare templates

- Virtual GPU License Server

- Launch the deployment

- Test a vGPU instance

Prerequisites:

This document provides the information needed to use vGPU with Red Hat OpenStack Platform 14. We will not explain in detail how to deploy RHOSP; we will focus on the NVIDIA Tesla vGPU-specific configuration.

Description of the platform

For this test we will use two servers. The compute node has two GPU boards (M10 and M60). This lab environment uses heterogeneous GPU board types; for a production environment, I advise using a single type of card, such as the Tesla V100 for the data center or the Tesla T4 for the edge.

- One Dell PowerEdge R730: compute node with two GPU cards:

  - NVIDIA Tesla M10 with four physical GPUs per PCI board

  - NVIDIA Tesla M60 with two physical GPUs per PCI board

- One Dell PowerEdge R440: qemu/kvm host for the director and controller VMs. This server hosts two control VMs:

  - lab-director: director to manage the RHOSP deployment

  - lab-controller: RHOSP controller

On these older Tesla M10 and M60 boards, CUDA only runs with the full-GPU vGPU profile (8Q). I encourage you to use Tesla V100 or T4 boards to be able to use all vGPU profiles.

The platform uses network isolation with VLANs on one NIC.

Install KVM on RHEL 7.6 server

Enable repositories:

[root@lab607 ~]# subscription-manager register --username myrhnaccount

[root@lab607 ~]# subscription-manager attach --pool=XXXXXXXXXXXXX

[root@lab607 ~]# subscription-manager repos --disable=*

[root@lab607 ~]# subscription-manager repos --enable=rhel-7-server-rpms --enable=rhel-7-server-openstack-14-rpms

Install OVS and KVM packages:

[root@lab607 ~]# yum install -y libguestfs libguestfs-tools openvswitch virt-install kvm libvirt libvirt-python python-virtinst libguestfs-xfs wget net-tools nmap

Enable libvirt:

[root@lab607 ~]# systemctl start libvirtd

[root@lab607 ~]# systemctl enable libvirtd

Replace firewalld with iptables on the host lab607:

[root@lab607 ~]# systemctl stop firewalld

[root@lab607 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@lab607 ~]# yum install iptables-services

[root@lab607 ~]# systemctl start iptables

[root@lab607 ~]# systemctl enable iptables

Enable Open vSwitch on the host lab607:

[root@lab607 ~]# systemctl start openvswitch

[root@lab607 ~]# systemctl enable openvswitch

Created symlink from /etc/systemd/system/multi-user.target.wants/openvswitch.service to /usr/lib/systemd/system/openvswitch.service.

Prepare br0:

[root@lab607 ~]# cat << EOF > /etc/sysconfig/network-scripts/ifcfg-br0

DEVICE=br0

TYPE=OVSBridge

DEVICETYPE=ovs

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=none

IPADDR="192.168.168.7"

PREFIX="24"

EOF

[root@lab607 ~]# cat << EOF > /etc/sysconfig/network-scripts/ifcfg-em2

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=em2

UUID=c0da4182-e956-4c08-a9ab-9d11ccbfdaa6

DEVICE=em2

ONBOOT=no

DEVICETYPE=ovs

TYPE=OVSPort

OVS_BRIDGE=br0

EOF

Create libvirt bridge br0:

[root@lab607 ~]# cat br0.xml

<network>

<name>br0</name>

<forward mode='bridge'/>

<bridge name='br0'/>

<virtualport type='openvswitch'/>

</network>

[root@lab607 ~]# sudo virsh net-define br0.xml

Network br0 defined from br0.xml

[root@lab607 ~]# virsh net-start br0

Network br0 started

Director VM

Copy the link of the latest RHEL 7.6 KVM cloud image (rhel-server-7.6-x86_64-kvm.qcow2) from: https://access.redhat.com/downloads/

[egallen@lab607 ~]$ sudo mkdir -p /data/inetsoft

[egallen@lab607 ~]$ cd /data/inetsoft

[egallen@lab607 inetsoft]$ curl -o rhel-server-7.6-x86_64-kvm.qcow2 "https://access.cdn.redhat.com/content/origin/files/sha256/XX/XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX/rhel-server-7.6-x86_64-kvm.qcow2?user=XXXXXXXXXXXXXXXXXXXXXXXXXXX&_auth_=XXXXXXXXXXXXXX_XXXXXXXXXXXXXXXXXXXXXXXX"

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 653M 100 653M 0 0 54.7M 0 0:00:11 0:00:11 --:--:-- 64.5M

[egallen@lab607 inetsoft]$ ls -lah rhel-server-7.6-x86_64-kvm.qcow2

-rw-rw-r--. 1 egallen egallen 654M Jan 16 04:45 rhel-server-7.6-x86_64-kvm.qcow2
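Before building a VM disk from the downloaded image, it can be worth comparing its SHA-256 digest with the checksum shown on the download page. The helper below is a minimal sketch; the function name `verify_sha256` is hypothetical, and the expected checksum has to be copied from the download page by hand.

```shell
#!/bin/bash
# Succeed when the SHA-256 digest of file $1 equals the expected hex string $2.
# Returns 2 when the file cannot be read, 1 on a mismatch.
verify_sha256() {
    local file="$1" expected="$2" actual
    actual=$(sha256sum "$file") || return 2
    # sha256sum prints "<digest>  <file>"; keep only the digest.
    actual=${actual%% *}
    [ "$actual" = "$expected" ]
}

# Usage (the checksum is a placeholder to replace with the published value):
#   verify_sha256 rhel-server-7.6-x86_64-kvm.qcow2 "<published sha256>" \
#       && echo "checksum OK" || echo "checksum MISMATCH"
```

A mismatch usually means a truncated download or an expired download link that returned an error page instead of the image.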

Create the qcow2 file:

[egallen@lab607 ~]$ sudo qemu-img create -f qcow2 -o preallocation=metadata /var/lib/libvirt/images/lab-director.qcow2 120G;

Formatting '/var/lib/libvirt/images/lab-director.qcow2', fmt=qcow2 size=128849018880 cluster_size=65536 preallocation=metadata lazy_refcounts=off refcount_bits=16

[egallen@lab607 ~]$ sudo virt-resize --expand /dev/sda1 /data/inetsoft/rhel-server-7.6-x86_64-kvm.qcow2 /var/lib/libvirt/images/lab-director.qcow2

[ 0.0] Examining /data/inetsoft/rhel-server-7.6-x86_64-kvm.qcow2

100% ⟦▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒⟧ --:--

**********

Summary of changes:

/dev/sda1: This partition will be resized from 7.8G to 120.0G. The

filesystem xfs on /dev/sda1 will be expanded using the 'xfs_growfs'

method.

**********

[ 18.2] Setting up initial partition table on /var/lib/libvirt/images/lab-director.qcow2

[ 18.3] Copying /dev/sda1

100% ⟦▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒⟧ 00:00

[ 43.7] Expanding /dev/sda1 using the 'xfs_growfs' method

Resize operation completed with no errors. Before deleting the old disk,

carefully check that the resized disk boots and works correctly.

[egallen@lab607 ~]$ sudo virt-customize -a /var/lib/libvirt/images/lab-director.qcow2 --uninstall cloud-init --root-password password:XXXXXXXXX

[ 0.0] Examining the guest ...

[ 1.9] Setting a random seed

[ 1.9] Setting the machine ID in /etc/machine-id

[ 1.9] Uninstalling packages: cloud-init

[ 3.2] Setting passwords

[ 4.4] Finishing off

[root@lab607 ~]# sudo virt-install --ram 32768 --vcpus 8 --os-variant rhel7 \

--disk path=/var/lib/libvirt/images/lab-director.qcow2,device=disk,bus=virtio,format=qcow2 \

--graphics vnc,listen=0.0.0.0 --noautoconsole \

--network network:default \

--network network:br0 \

--name lab-director --dry-run \

--print-xml > /tmp/lab-director.xml;

[root@lab607 ~]# sudo virsh define --file /tmp/lab-director.xml

Start the VM and get its IP address from the dnsmasq DHCP log:

[egallen@lab607 ~]$ sudo virsh start lab-director

Domain lab-director started

[egallen@lab607 ~]$ sudo cat /var/log/messages | grep dnsmasq-dhcp

Jan 16 04:56:50 lab607 dnsmasq-dhcp[2242]: DHCPDISCOVER(virbr0) 52:54:00:5e:9c:32

Jan 16 04:56:50 lab607 dnsmasq-dhcp[2242]: DHCPOFFER(virbr0) 192.168.122.103 52:54:00:5e:9c:32

Jan 16 04:56:50 lab607 dnsmasq-dhcp[2242]: DHCPREQUEST(virbr0) 192.168.122.103 52:54:00:5e:9c:32

Jan 16 04:56:50 lab607 dnsmasq-dhcp[2242]: DHCPACK(virbr0) 192.168.122.103 52:54:00:5e:9c:32
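Reading the lease out of the log by eye works for one VM, but the lookup can also be scripted. The following is a sketch, assuming the dnsmasq log format shown above; the function name `lease_ip_for_mac` is hypothetical.

```shell
#!/bin/bash
# Print the most recently acknowledged DHCP lease for MAC address $1.
# Reads dnsmasq log lines on stdin; exits non-zero when no lease is found.
lease_ip_for_mac() {
    local mac="$1"
    # DHCPACK lines look like "... DHCPACK(virbr0) <ip> <mac> [hostname]",
    # so find the DHCPACK field and compare the MAC two fields later.
    awk -v mac="$mac" '
        {
            for (i = 1; i < NF; i++) {
                if ($i ~ /^DHCPACK\(/ && tolower($(i + 2)) == tolower(mac)) {
                    ip = $(i + 1)
                }
            }
        }
        END { if (ip != "") print ip; else exit 1 }
    '
}

# Usage on the KVM host:
#   sudo grep dnsmasq-dhcp /var/log/messages | lease_ip_for_mac 52:54:00:5e:9c:32
```

The MAC address of the VM can be read from the generated domain XML or from `virsh domiflist lab-director`.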

Log into the VM:

[egallen@lab607 ~]$ ssh root@192.168.122.103

The authenticity of host '192.168.122.103 (192.168.122.103)' can't be established.

ECDSA key fingerprint is SHA256:yf9AxYVo2d5FwuLeAEswbWK83IYVYDcvTbJdtxEcv5A.

ECDSA key fingerprint is MD5:86:77:4e:e0:85:89:5c:03:82:ce:59:e0:a4:dd:92:4d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.122.103' (ECDSA) to the list of known hosts.

root@192.168.122.103's password:

[root@localhost ~]#

Check RHEL release:

[root@localhost ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.6 (Maipo)

Set hostname:

[root@localhost ~]# hostnamectl set-hostname lab-director.lan.redhat.com

[root@localhost ~]# exit

[egallen@lab607 ~]$ ssh root@192.168.122.103

root@192.168.122.103's password:

Last login: Wed Jan 16 05:02:35 2019 from gateway

[root@lab-director ~]#

Add stack user:

[root@lab-director ~]# adduser stack

[root@lab-director ~]# passwd stack

Changing password for user stack.

New password:

Retype new password:

passwd: all authentication tokens updated successfully.

[root@lab-director ~]# echo "stack ALL=(root) NOPASSWD:ALL" | tee -a /etc/sudoers.d/stack

stack ALL=(root) NOPASSWD:ALL

[root@lab-director ~]# chmod 0440 /etc/sudoers.d/stack

Copy SSH key:

[egallen@lab607 ~]$ ssh-copy-id stack@192.168.122.103

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

stack@192.168.122.103's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'stack@192.168.122.103'"

and check to make sure that only the key(s) you wanted were added.

[egallen@lab607 ~]$ ssh stack@192.168.122.103

[stack@lab-director ~]$

Set up network configuration:

[stack@lab-director ~]$ sudo tee /etc/sysconfig/network-scripts/ifcfg-eth0 << EOF

DEVICE="eth0"

BOOTPROTO="none"

ONBOOT="yes"

TYPE="Ethernet"

USERCTL="yes"

PEERDNS="yes"

IPV6INIT="yes"

PERSISTENT_DHCLIENT="1"

IPADDR=192.168.122.10

PREFIX=24

GATEWAY=192.168.122.1

DNS1=192.168.122.1

EOF

[stack@lab-director ~]$ sudo reboot

[egallen@lab607 ~]$ ssh stack@lab-director

The authenticity of host 'lab-director (192.168.122.10)' can't be established.

ECDSA key fingerprint is SHA256:yf9AxYVo2d5FwuLeAEswbWK83IYVYDcvTbJdtxEcv5A.

ECDSA key fingerprint is MD5:86:77:4e:e0:85:89:5c:03:82:ce:59:e0:a4:dd:92:4d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'lab-director,192.168.122.10' (ECDSA) to the list of known hosts.

Last login: Wed Jan 16 05:15:40 2019 from gateway

[stack@lab-director ~]$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:5e:9c:32 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.10/24 brd 192.168.122.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe5e:9c32/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:54:84:08 brd ff:ff:ff:ff:ff:ff

Set up /etc/hosts:

[stack@lab-director ~]$ sudo tee /etc/hosts << EOF

127.0.0.1 lab-director localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

EOF

Enable RHOSP14 repositories:

[stack@lab-director ~]$ sudo subscription-manager register --username myrhnaccount

Registering to: subscription.rhsm.redhat.com:443/subscription

Password:

The system has been registered with ID: XXXXX-XXXX-XXXX-XXXXXXXX

The registered system name is: lab-director.lan.redhat.com

WARNING

The yum/dnf plugins: /etc/yum/pluginconf.d/subscription-manager.conf, /etc/yum/pluginconf.d/product-id.conf were automatically enabled for the benefit of Red Hat Subscription Management. If not desired, use "subscription-manager config --rhsm.auto_enable_yum_plugins=0" to block this behavior.

List available pools:

[stack@lab-director ~]$ sudo subscription-manager list --available

Find the "Pool ID" of the subscription that provides "Red Hat OpenStack" and attach it:

[stack@lab-director ~]$ sudo subscription-manager attach --pool=XXXXXXXXXXXXXXXXXXXXXXXX

Successfully attached a subscription for: SKU

Disable all repositories:

[stack@lab-director ~]$ sudo subscription-manager repos --disable=*

...

Enable RHEL7 and RHOSP14 repositories only:

[stack@lab-director ~]$ sudo subscription-manager repos --enable=rhel-7-server-rpms --enable=rhel-7-server-openstack-14-rpms --enable=rhel-7-server-extras-rpms --enable=rhel-7-server-rh-common-rpms --enable=rhel-ha-for-rhel-7-server-rpms

Repository 'rhel-7-server-rpms' is enabled for this system.

Repository 'rhel-ha-for-rhel-7-server-rpms' is enabled for this system.

Repository 'rhel-7-server-extras-rpms' is enabled for this system.

Repository 'rhel-7-server-openstack-14-rpms' is enabled for this system.

Repository 'rhel-7-server-rh-common-rpms' is enabled for this system.

Upgrade:

[stack@lab-director ~]$ sudo yum upgrade -y

…

Complete!

Make a snapshot on the KVM host of this fresh RHEL 7.6 VM:

[stack@lab-director ~]$ sudo systemctl poweroff

Connection to lab-director closed by remote host.

Connection to lab-director closed.

[egallen@lab607 ~]$ sudo virsh snapshot-list lab-director

[egallen@lab607 ~]$ sudo virsh snapshot-create-as lab-director 20190116-1-rhel76

Domain snapshot 20190116-1-rhel76 created

[egallen@lab607 ~]$ sudo virsh snapshot-list lab-director

Name Creation Time State

------------------------------------------------------------

20190116-1-rhel76 2019-01-16 05:42:35 -0500 shutoff

Install director binaries:

[stack@lab-director ~]$ time sudo yum install -y python-tripleoclient

Loaded plugins: product-id, search-disabled-repos, subscription-manager

…

Complete!

real 4m47.428s

user 1m41.595s

sys 0m26.743s

Prepare director configuration:

[stack@lab-director ~]$ cat << EOF > /home/stack/undercloud.conf

[DEFAULT]

undercloud_hostname = lab-director.lan.redhat.com

local_ip = 172.16.16.10/24

undercloud_public_host = 172.16.16.11

undercloud_admin_host = 172.16.16.12

local_interface = eth1

undercloud_nameservers = 10.19.43.29

undercloud_ntp_servers = clock.redhat.com

overcloud_domain_name = lan.redhat.com

container_images_file=/home/stack/containers-prepare-parameter.yaml

docker_insecure_registries=172.16.16.12:8787,172.16.16.10:8787,docker-registry.engineering.redhat.com,brew-pulp-docker01.web.prod.ext.phx2.redhat.com:8888

[ctlplane-subnet]

cidr = 172.16.16.0/24

dhcp_start = 172.16.16.201

dhcp_end = 172.16.16.220

inspection_iprange = 172.16.16.221,172.16.16.230

gateway = 172.16.16.10

masquerade_network = True

subnets = ctlplane-subnet

local_subnet = ctlplane-subnet

masquerade = true

EOF
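A typo in one of these addresses only shows up late in the undercloud installation, so a quick sanity check beforehand can save a 30-minute run. The sketch below checks that the addresses used above sit inside the control plane CIDR and that the DHCP and inspection ranges do not overlap. The function names are hypothetical, and the values are copied by hand from the configuration above rather than parsed from the file.

```shell
#!/bin/bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed when address $1 lies inside the network $2 (CIDR notation).
ip_in_cidr() {
    local ip net prefix mask
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    (( (ip & mask) == (net & mask) ))
}

# Succeed when the inclusive ranges $1-$2 and $3-$4 share an address.
ranges_overlap() {
    local s1 e1 s2 e2
    s1=$(ip_to_int "$1"); e1=$(ip_to_int "$2")
    s2=$(ip_to_int "$3"); e2=$(ip_to_int "$4")
    (( s1 <= e2 && s2 <= e1 ))
}

# Values copied from the undercloud.conf above.
cidr=172.16.16.0/24
for addr in 172.16.16.10 172.16.16.11 172.16.16.12 \
            172.16.16.201 172.16.16.220 172.16.16.221 172.16.16.230; do
    if ip_in_cidr "$addr" "$cidr"; then
        echo "$addr is inside $cidr"
    else
        echo "$addr is OUTSIDE $cidr"
    fi
done

if ranges_overlap 172.16.16.201 172.16.16.220 172.16.16.221 172.16.16.230; then
    echo "dhcp and inspection ranges overlap"
else
    echo "dhcp and inspection ranges are disjoint"
fi
```

The helpers do not handle octets written with leading zeros, which bash arithmetic would read as octal.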

Generate default container configuration:

[stack@lab-director ~]$ time openstack tripleo container image prepare default --local-push-destination --output-env-file ~/containers-prepare-parameter.yaml

real 0m1.115s

user 0m0.714s

sys 0m0.251s

Check container configuration:

[stack@lab-director ~]$ cat ~/containers-prepare-parameter.yaml

# Generated with the following on 2019-01-16T10:41:41.881679

#

# openstack tripleo container image prepare default --local-push-destination --output-env-file /home/stack/containers-prepare-parameter.yaml

#

parameter_defaults:

ContainerImagePrepare:

- push_destination: true

set:

ceph_image: rhceph-3-rhel7

ceph_namespace: registry.access.redhat.com/rhceph

ceph_tag: latest

name_prefix: openstack-

name_suffix: ''

namespace: registry.access.redhat.com/rhosp14

neutron_driver: null

openshift_asb_namespace: registry.access.redhat.com/openshift3

openshift_asb_tag: v3.11

openshift_cluster_monitoring_image: ose-cluster-monitoring-operator

openshift_cluster_monitoring_namespace: registry.access.redhat.com/openshift3

openshift_cluster_monitoring_tag: v3.11

openshift_cockpit_image: registry-console

openshift_cockpit_namespace: registry.access.redhat.com/openshift3

openshift_cockpit_tag: v3.11

openshift_configmap_reload_image: ose-configmap-reloader

openshift_configmap_reload_namespace: registry.access.redhat.com/openshift3

openshift_configmap_reload_tag: v3.11

openshift_etcd_image: etcd

openshift_etcd_namespace: registry.access.redhat.com/rhel7

openshift_etcd_tag: latest

openshift_gluster_block_image: rhgs-gluster-block-prov-rhel7

openshift_gluster_image: rhgs-server-rhel7

openshift_gluster_namespace: registry.access.redhat.com/rhgs3

openshift_gluster_tag: latest

openshift_grafana_namespace: registry.access.redhat.com/openshift3

openshift_grafana_tag: v3.11

openshift_heketi_image: rhgs-volmanager-rhel7

openshift_heketi_namespace: registry.access.redhat.com/rhgs3

openshift_heketi_tag: latest

openshift_kube_rbac_proxy_image: ose-kube-rbac-proxy

openshift_kube_rbac_proxy_namespace: registry.access.redhat.com/openshift3

openshift_kube_rbac_proxy_tag: v3.11

openshift_kube_state_metrics_image: ose-kube-state-metrics

openshift_kube_state_metrics_namespace: registry.access.redhat.com/openshift3

openshift_kube_state_metrics_tag: v3.11

openshift_namespace: registry.access.redhat.com/openshift3

openshift_oauth_proxy_tag: v3.11

openshift_prefix: ose

openshift_prometheus_alertmanager_tag: v3.11

openshift_prometheus_config_reload_image: ose-prometheus-config-reloader

openshift_prometheus_config_reload_namespace: registry.access.redhat.com/openshift3

openshift_prometheus_config_reload_tag: v3.11

openshift_prometheus_node_exporter_tag: v3.11

openshift_prometheus_operator_image: ose-prometheus-operator

openshift_prometheus_operator_namespace: registry.access.redhat.com/openshift3

openshift_prometheus_operator_tag: v3.11

openshift_prometheus_tag: v3.11

openshift_tag: v3.11

tag: latest

tag_from_label: '{version}-{release}'

Install director:

[stack@lab-director ~]$ time openstack undercloud install

…

########################################################

Deployment successfull!

########################################################

Writing the stack virtual update mark file /var/lib/tripleo-heat-installer/update_mark_undercloud

##########################################################

The Undercloud has been successfully installed.

Useful files:

Password file is at ~/undercloud-passwords.conf

The stackrc file is at ~/stackrc

Use these files to interact with OpenStack services, and

ensure they are secured.

##########################################################

real 30m53.087s

user 10m50.567s

sys 1m30.979s

Test Keystone:

[stack@lab-director ~]$ source stackrc

(undercloud) [stack@lab-director ~]$ openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2019-01-17T00:04:18+0000 |

| id | XXXXXXXXXXXXXXXXXXXXXXXXXXXX |

| project_id | 8eaa8db43a6743c5851cc5219056dd80 |

| user_id | f9a61486cc59444481e401b4851ac3d3 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

Set up networks

List the undercloud subnets:

(undercloud) [stack@lab-director ~]$ openstack subnet list

+--------------------------------------+-----------------+--------------------------------------+----------------+

| ID | Name | Network | Subnet |

+--------------------------------------+-----------------+--------------------------------------+----------------+

| e0eb61d1-932c-4fb2-bd89-86c0d43adbdb | ctlplane-subnet | 8088e8ba-21ad-4b0f-98ec-83f24cc3ca39 | 172.16.16.0/24 |

+--------------------------------------+-----------------+--------------------------------------+----------------+

Show the details of the ctlplane subnet:

(undercloud) [stack@lab-director ~]$ openstack subnet show ctlplane-subnet

+-------------------+----------------------------------------------------------+

| Field | Value |

+-------------------+----------------------------------------------------------+

| allocation_pools | 172.16.16.201-172.16.16.220 |

| cidr | 172.16.16.0/24 |

| created_at | 2019-01-16T17:20:41Z |

| description | |

| dns_nameservers | 10.19.43.29 |

| enable_dhcp | True |

| gateway_ip | 172.16.16.10 |

| host_routes | destination='169.254.169.254/32', gateway='172.16.16.12' |

| id | e0eb61d1-932c-4fb2-bd89-86c0d43adbdb |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| name | ctlplane-subnet |

| network_id | 8088e8ba-21ad-4b0f-98ec-83f24cc3ca39 |

| project_id | 8eaa8db43a6743c5851cc5219056dd80 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2019-01-16T17:20:41Z |

+-------------------+----------------------------------------------------------+

Prepare overcloud default image for the controller

Install and extract the images before importing them into glance:

[stack@lab-director ~]$ sudo yum install -y rhosp-director-images rhosp-director-images-ipa libguestfs-tools

[stack@lab-director ~]$ sudo mkdir -p /var/images/x86_64

[stack@lab-director ~]$ sudo chown stack:stack /var/images/x86_64

[stack@lab-director ~]$ cd /var/images/x86_64

(undercloud) [stack@lab-director x86_64]$ for i in /usr/share/rhosp-director-images/overcloud-full-latest-14.0.tar /usr/share/rhosp-director-images/ironic-python-agent-latest-14.0.tar; do tar -xvf $i; done

Customize and upload default overcloud image that will be used for the controller only:

[stack@lab-director x86_64]$ virt-customize -a overcloud-full.qcow2 --root-password password:XXXXXX

[ 0.0] Examining the guest ...

[ 40.3] Setting a random seed

[ 40.5] Setting the machine ID in /etc/machine-id

[ 40.6] Setting passwords

[ 59.1] Finishing off

[stack@lab-director ~]$ source ~/stackrc

(undercloud) [stack@lab-director ~]$ openstack overcloud image upload --update-existing --image-path /var/images/x86_64

Image "overcloud-full-vmlinuz" was uploaded.

+--------------------------------------+------------------------+-------------+---------+--------+

| ID | Name | Disk Format | Size | Status |

+--------------------------------------+------------------------+-------------+---------+--------+

| 216aea4a-912a-42a2-9cab-441d289f4fb6 | overcloud-full-vmlinuz | aki | 6639920 | active |

+--------------------------------------+------------------------+-------------+---------+--------+

Image "overcloud-full-initrd" was uploaded.

+--------------------------------------+-----------------------+-------------+----------+--------+

| ID | Name | Disk Format | Size | Status |

+--------------------------------------+-----------------------+-------------+----------+--------+

| d16fce37-fab6-4eb7-b19a-4b3f572e0214 | overcloud-full-initrd | ari | 60133074 | active |

+--------------------------------------+-----------------------+-------------+----------+--------+

Image "overcloud-full" was uploaded.

+--------------------------------------+----------------+-------------+------------+--------+

| ID | Name | Disk Format | Size | Status |

+--------------------------------------+----------------+-------------+------------+--------+

| ee868592-8a69-4636-9c02-92cd56805de0 | overcloud-full | qcow2 | 1031274496 | active |

+--------------------------------------+----------------+-------------+------------+--------+

Image "bm-deploy-kernel" was uploaded.

+--------------------------------------+------------------+-------------+---------+--------+

| ID | Name | Disk Format | Size | Status |

+--------------------------------------+------------------+-------------+---------+--------+

| f1b189e3-d3a0-4b33-acad-81f01ade39f4 | bm-deploy-kernel | aki | 6639920 | active |

+--------------------------------------+------------------+-------------+---------+--------+

Image "bm-deploy-ramdisk" was uploaded.

+--------------------------------------+-------------------+-------------+-----------+--------+

| ID | Name | Disk Format | Size | Status |

+--------------------------------------+-------------------+-------------+-----------+--------+

| f8c47730-5fa7-459e-b3ec-95c4d2c792ae | bm-deploy-ramdisk | ari | 420645365 | active |

+--------------------------------------+-------------------+-------------+-----------+--------+

Check the uploaded images:

(undercloud) [stack@lab-director ~]$ openstack image list

+--------------------------------------+------------------------+--------+

| ID | Name | Status |

+--------------------------------------+------------------------+--------+

| f1b189e3-d3a0-4b33-acad-81f01ade39f4 | bm-deploy-kernel | active |

| f8c47730-5fa7-459e-b3ec-95c4d2c792ae | bm-deploy-ramdisk | active |

| ee868592-8a69-4636-9c02-92cd56805de0 | overcloud-full | active |

| d16fce37-fab6-4eb7-b19a-4b3f572e0214 | overcloud-full-initrd | active |

| 216aea4a-912a-42a2-9cab-441d289f4fb6 | overcloud-full-vmlinuz | active |

+--------------------------------------+------------------------+--------+
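The same check can be scripted so that a missing or inactive image is caught before the deployment starts. This is a sketch: the function name `missing_images` is hypothetical, and it expects one "name status" pair per line, which is what `openstack image list -f value -c Name -c Status` prints.

```shell
#!/bin/bash
# Read "<name> <status>" lines on stdin and print every image name given
# as an argument that is either absent or not in the "active" state.
missing_images() {
    local listing name
    listing=$(cat)
    for name in "$@"; do
        # -F: fixed string, -x: the whole line must match "<name> active".
        if ! grep -Fqx "$name active" <<< "$listing"; then
            echo "$name"
        fi
    done
}

# Usage on the director, after sourcing ~/stackrc:
#   openstack image list -f value -c Name -c Status | missing_images \
#       bm-deploy-kernel bm-deploy-ramdisk \
#       overcloud-full overcloud-full-initrd overcloud-full-vmlinuz
```

An empty output means all the listed images are present and active.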

Prepare overcloud GPU image for compute nodes

Install ISO tooling:

[stack@lab-director x86_64]$ sudo yum install genisoimage -y

[stack@lab-director x86_64]$ mkdir nvidia-package

Prepare an ISO file containing the NVIDIA GRID RPM downloaded from the NVIDIA website:

[stack@lab-director nvidia-package]$ ls

NVIDIA-vGPU-rhel-7.6-410.91.x86_64.rpm

[stack@lab-director x86_64]$ genisoimage -o nvidia-package.iso -R -J -V NVIDIA-PACKAGE nvidia-package/

I: -input-charset not specified, using utf-8 (detected in locale settings)

67.01% done, estimate finish Wed Jan 16 16:57:14 2019

Total translation table size: 0

Total rockridge attributes bytes: 279

Total directory bytes: 0

Path table size(bytes): 10

Max brk space used 0

7472 extents written (14 MB)

Prepare a customization script for the GPU image:

[stack@lab-director x86_64]$ cat << EOF > install-vgpu-grid-drivers.sh

#!/bin/bash

# Remove nouveau driver

if grep -Fq "modprobe.blacklist=nouveau" /etc/default/grub

then

# Nouveau already removed

echo "Nothing to do for Nouveau driver"

echo ">>>> /etc/default/grub:"

cat /etc/default/grub | grep nouveau

else

# To fix https://bugzilla.redhat.com/show_bug.cgi?id=1646871

sed -i 's/crashkernel=auto\"\ /crashkernel=auto\ /' /etc/default/grub

sed -i 's/net\.ifnames=0$/net.ifnames=0\"/' /etc/default/grub

# Nouveau driver not removed

sed -i 's/GRUB_CMDLINE_LINUX="[^"]*/& modprobe.blacklist=nouveau/' /etc/default/grub

echo ">>>> /etc/default/grub:"

cat /etc/default/grub | grep nouveau

grub2-mkconfig -o /boot/grub2/grub.cfg

fi

# NVIDIA GRID package

mkdir /tmp/mount

mount LABEL=NVIDIA-PACKAGE /tmp/mount

rpm -ivh /tmp/mount/NVIDIA-vGPU-rhel-7.6-410.91.x86_64.rpm

EOF
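The nouveau step of the script is meant to be idempotent: the kernel argument should be added once, however many times the customization runs. The sketch below isolates that logic in a function that can be tried on a scratch file before touching an image. The function name `add_kernel_arg` and the sample grub content are assumptions for illustration, and `sed -i` is used as provided by GNU sed on RHEL.

```shell
#!/bin/bash
# Append kernel argument $2 to GRUB_CMDLINE_LINUX in file $1, unless the
# file already mentions it. The argument must not contain "/" or "&",
# because it is pasted into the replacement part of a sed expression.
add_kernel_arg() {
    local file="$1" arg="$2"
    # -F: fixed string, -q: quiet. There is no -x, because the argument is
    # only a part of the GRUB_CMDLINE_LINUX line, never the whole line.
    if grep -Fq "$arg" "$file"; then
        return 0
    fi
    # Match everything up to (not including) the closing quote and append.
    sed -i "s/^GRUB_CMDLINE_LINUX=\"[^\"]*/& ${arg}/" "$file"
}

# Demonstration on a scratch file with hypothetical content.
demo=$(mktemp)
printf '%s\n' 'GRUB_TIMEOUT=1' \
    'GRUB_CMDLINE_LINUX="console=tty0 crashkernel=auto net.ifnames=0"' > "$demo"
add_kernel_arg "$demo" modprobe.blacklist=nouveau
add_kernel_arg "$demo" modprobe.blacklist=nouveau   # second call changes nothing
grep GRUB_CMDLINE_LINUX "$demo"
rm -f "$demo"
```

On a real system the change only takes effect after `grub2-mkconfig` regenerates the boot configuration, as the script above does.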

Customize GPU image:

[stack@lab-director x86_64]$ cp overcloud-full.qcow2 overcloud-gpu.qcow2

[stack@lab-director x86_64]$ virt-customize --attach nvidia-package.iso -a overcloud-gpu.qcow2 -v --run install-vgpu-grid-drivers.sh

[ 0.0] Examining the guest ...

libguestfs: creating COW overlay to protect original drive content

libguestfs: command: run: qemu-img

libguestfs: command: run: \ info

libguestfs: command: run: \ --output json

libguestfs: command: run: \ ./nvidia-package.iso

libguestfs: parse_json: qemu-img info JSON output:\n{\n "virtual-size": 15302656,\n "filename": "./nvidia-package.iso",\n "format": "raw",\n "actual-size": 15302656,\n

Relabel the image for SELinux:

[stack@lab-director x86_64]$ time virt-customize -a overcloud-gpu.qcow2 --selinux-relabel

[ 0.0] Examining the guest ...

[ 25.3] Setting a random seed

[ 25.5] SELinux relabelling

[ 387.5] Finishing off

real 6m28.262s

user 0m0.086s

sys 0m0.059s

Prepare vmlinuz and initrd files for glance:

[stack@lab-director x86_64]$ sudo mkdir /tmp/initrd

[stack@lab-director x86_64]$ guestmount -a overcloud-gpu.qcow2 -i --ro /tmp/initrd

[stack@lab-director x86_64]$ df -T | grep fuse

/dev/fuse fuse 3524864 2207924 1316940 63% /tmp/initrd

[stack@lab-director x86_64]$ ls -la /tmp/initrd/boot

total 138476

dr-xr-xr-x. 4 root root 4096 Jan 16 17:21 .

drwxr-xr-x. 17 root root 224 Jan 3 07:21 ..

-rw-r--r--. 1 root root 151922 Nov 15 12:41 config-3.10.0-957.1.3.el7.x86_64

drwxr-xr-x. 3 root root 17 Jan 3 06:18 efi

drwx------. 5 root root 97 Jan 16 17:05 grub2

-rw-------. 1 root root 53704670 Jan 3 06:25 initramfs-0-rescue-0bc51786d56a4645b23dcd0510229358.img

-rw-------. 1 root root 70778142 Jan 16 17:20 initramfs-3.10.0-957.1.3.el7.x86_64.img

-rw-r--r--. 1 root root 314072 Nov 15 12:41 symvers-3.10.0-957.1.3.el7.x86_64.gz

-rw-------. 1 root root 3544010 Nov 15 12:41 System.map-3.10.0-957.1.3.el7.x86_64

-rwxr-xr-x. 1 root root 6639920 Jan 3 06:25 vmlinuz-0-rescue-0bc51786d56a4645b23dcd0510229358

-rwxr-xr-x. 1 root root 6639920 Nov 15 12:41 vmlinuz-3.10.0-957.1.3.el7.x86_64

-rw-r--r--. 1 root root 170 Nov 15 12:41 .vmlinuz-3.10.0-957.1.3.el7.x86_64.hmac

[stack@lab-director x86_64]$ cp /tmp/initrd/boot/vmlinuz*x86_64 ./overcloud-gpu.vmlinuz

[stack@lab-director x86_64]$ cp /tmp/initrd/boot/initramfs-3*x86_64.img ./overcloud-gpu.initrd

[stack@lab-director x86_64]$ ls -lah overcloud-gpu*

-rw-------. 1 stack stack 68M Jan 17 02:52 overcloud-gpu.initrd

-rw-r--r--. 1 qemu qemu 1.5G Jan 16 17:49 overcloud-gpu.qcow2

-rwxr-xr-x. 1 stack stack 6.4M Jan 17 02:52 overcloud-gpu.vmlinuz
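The `cp` globs above work because the image contains exactly one non-rescue kernel. If an image ever carries several kernels, `cp` would receive multiple sources and fail. A possible way to pick a single file is sketched below; the function name `newest_boot_file` is hypothetical, and `sort -V` (GNU coreutils version sort) is assumed to be a good enough ordering for kernel version strings.

```shell
#!/bin/bash
# Print the name of the newest non-rescue file in directory $1 whose name
# starts with $2 and ends with $3 (for example "vmlinuz-" and "x86_64").
# Prints nothing when no file matches.
newest_boot_file() {
    local dir="$1" prefix="$2" suffix="$3" f
    for f in "$dir"/"$prefix"*"$suffix"; do
        [ -e "$f" ] || continue                    # glob matched nothing
        case "$f" in *-rescue-*) continue ;; esac  # skip rescue entries
        printf '%s\n' "${f##*/}"
    done | sort -V | tail -n 1
}

# Usage with the guestmount directory from the steps above:
#   kernel=$(newest_boot_file /tmp/initrd/boot vmlinuz- x86_64)
#   initrd=$(newest_boot_file /tmp/initrd/boot initramfs- x86_64.img)
#   cp "/tmp/initrd/boot/$kernel" ./overcloud-gpu.vmlinuz
#   cp "/tmp/initrd/boot/$initrd" ./overcloud-gpu.initrd
```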

Upload GPU image into director glance service:

[stack@lab-director x86_64]$ source ~/stackrc

(undercloud) [stack@lab-director x86_64]$ openstack overcloud image upload --update-existing --os-image-name overcloud-gpu.qcow2

Image "overcloud-gpu-vmlinuz" was uploaded.

+--------------------------------------+-----------------------+-------------+---------+--------+

| ID | Name | Disk Format | Size | Status |

+--------------------------------------+-----------------------+-------------+---------+--------+

| 89db0719-ef1e-41a0-b9f7-6ff0460ec2ef | overcloud-gpu-vmlinuz | aki | 6639920 | active |

+--------------------------------------+-----------------------+-------------+---------+--------+

Image "overcloud-gpu-initrd" was uploaded.

+--------------------------------------+----------------------+-------------+----------+--------+

| ID | Name | Disk Format | Size | Status |

+--------------------------------------+----------------------+-------------+----------+--------+

| 4307ee93-bf2e-4c9e-85e6-c9679401268c | overcloud-gpu-initrd | ari | 70778142 | active |

+--------------------------------------+----------------------+-------------+----------+--------+

Image "overcloud-gpu" was uploaded.

+--------------------------------------+---------------+-------------+------------+--------+

| ID | Name | Disk Format | Size | Status |

+--------------------------------------+---------------+-------------+------------+--------+

| f23cb412-c6d3-4301-b84b-9188fc2507c2 | overcloud-gpu | qcow2 | 1508376576 | active |

+--------------------------------------+---------------+-------------+------------+--------+

Image "bm-deploy-kernel" is up-to-date, skipping.

Image "bm-deploy-ramdisk" is up-to-date, skipping.

Image file "/var/lib/ironic/httpboot/agent.kernel" is up-to-date, skipping.

Image file "/var/lib/ironic/httpboot/agent.ramdisk" is up-to-date, skipping.

(undercloud) [stack@lab-director x86_64]$ openstack image list

+--------------------------------------+------------------------+--------+

| ID | Name | Status |

+--------------------------------------+------------------------+--------+

| f1b189e3-d3a0-4b33-acad-81f01ade39f4 | bm-deploy-kernel | active |

| f8c47730-5fa7-459e-b3ec-95c4d2c792ae | bm-deploy-ramdisk | active |

| ee868592-8a69-4636-9c02-92cd56805de0 | overcloud-full | active |

| d16fce37-fab6-4eb7-b19a-4b3f572e0214 | overcloud-full-initrd | active |

| 216aea4a-912a-42a2-9cab-441d289f4fb6 | overcloud-full-vmlinuz | active |

| 3c59f45e-5bdf-466f-b0d0-1c68a1afbce6 | overcloud-gpu | active |

| a25fee19-85c3-4994-b2a9-3269f0cda2fe | overcloud-gpu-initrd | active |

| 4d6be38e-2a53-4fd0-a6c9-98aef0549363 | overcloud-gpu-vmlinuz | active |

+--------------------------------------+------------------------+--------+

Copy the default role templates:

(undercloud) [stack@lab-director ~]$ cp -r /usr/share/openstack-tripleo-heat-templates/roles ~/templates

Create the new ComputeGpu role file:

(undercloud) [stack@lab-director ~]$ cp /home/stack/templates/roles/Compute.yaml ~/templates/roles/ComputeGpu.yaml

Update the new ComputeGpu role file:

(undercloud) [stack@lab-director ~]$ sed -i s/Role:\ Compute/Role:\ ComputeGpu/g ~/templates/roles/ComputeGpu.yaml

(undercloud) [stack@lab-director ~]$ sed -i s/name:\ Compute/name:\ ComputeGpu/g ~/templates/roles/ComputeGpu.yaml

(undercloud) [stack@lab-director ~]$ sed -i s/Basic\ Compute\ Node\ role/GPU\ Compute\ Node\ role/g ~/templates/roles/ComputeGpu.yaml

(undercloud) [stack@lab-director ~]$ sed -i '/CountDefault:\ 1/a \ \ ImageDefault: overcloud-gpu' ~/templates/roles/ComputeGpu.yaml

(undercloud) [stack@lab-director ~]$ sed -i s/-compute-/-computegpu-/g /home/stack/templates/roles/ComputeGpu.yaml

(undercloud) [stack@lab-director ~]$ sed -i s/compute\.yaml/compute-gpu\.yaml/g ~/templates/roles/ComputeGpu.yaml
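
The role edits can be rehearsed on a scratch copy before touching the real templates. The sketch below, assuming GNU sed (as shipped with RHEL), applies equivalent substitutions in one pass to a trimmed-down stand-in for Compute.yaml. The helper name derive_gpu_role and the sample file content are illustrative and not part of director.

```shell
#!/bin/bash
# Hypothetical helper: derive a ComputeGpu role file from a Compute role file.
# Assumes GNU sed ("\n" in the replacement text is a GNU extension).
derive_gpu_role() {
  local src="$1" dst="$2"
  sed -e 's/Role: Compute/Role: ComputeGpu/g' \
      -e 's/name: Compute/name: ComputeGpu/g' \
      -e 's/Basic Compute Node role/GPU Compute Node role/g' \
      -e 's/^\( *\)CountDefault: 1$/&\n\1ImageDefault: overcloud-gpu/' \
      -e 's/-compute-/-computegpu-/g' \
      -e 's/compute\.yaml/compute-gpu.yaml/g' \
      "$src" > "$dst"
}

# Trimmed-down stand-in for Compute.yaml (illustrative content only).
workdir=$(mktemp -d)
cat > "$workdir/Compute.yaml" << 'EOF'
# Role: Compute #
- name: Compute
  description: |
    Basic Compute Node role
  CountDefault: 1
  HostnameFormatDefault: '%stackname%-compute-%index%'
EOF

derive_gpu_role "$workdir/Compute.yaml" "$workdir/ComputeGpu.yaml"
cat "$workdir/ComputeGpu.yaml"
```

The ImageDefault line reuses the indentation captured from the CountDefault line, so the result stays valid YAML whatever the indentation of the source file.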

Generate the NVIDIA GPU role:

(undercloud) [stack@lab-director ~]$ openstack overcloud roles generate --roles-path /home/stack/templates/roles -o /home/stack/templates/gpu_roles_data.yaml Controller Compute ComputeGpu

Check the generated role template:

(undercloud) [stack@lab-director ~]$ cat /home/stack/templates/gpu_roles_data.yaml | grep -i gpu

# Role: ComputeGpu #

- name: ComputeGpu

GPU Compute Node role

HostnameFormatDefault: '%stackname%-computegpu-%index%'

List bare metal nodes:

(undercloud) [stack@lab-director ~]$ openstack baremetal node list

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

| UUID | Name | Instance UUID | Power State | Provisioning State | Maintenance |

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

| 6031ff6f-4e90-4ec0-8ec6-0a360881dac7 | lab-controller | None | power off | available | False |

| 5fa3d40c-29b5-4540-85c9-e76ee5cb6c03 | lab-compute | None | power off | available | False |

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

Assign the compute-gpu profile to the GPU compute node, then check the result:

(undercloud) [stack@lab-director templates]$ openstack baremetal node set --property capabilities='profile:compute-gpu,boot_option:local' lab-compute

(undercloud) [stack@lab-director templates]$ openstack overcloud profiles list

+--------------------------------------+--------------------+-----------------+-----------------+-------------------+

| Node UUID | Node Name | Provision State | Current Profile | Possible Profiles |

+--------------------------------------+--------------------+-----------------+-----------------+-------------------+

| 6031ff6f-4e90-4ec0-8ec6-0a360881dac7 | lab-controller | available | control | |

| 5fa3d40c-29b5-4540-85c9-e76ee5cb6c03 | lab-compute | available | compute-gpu | |

+--------------------------------------+--------------------+-----------------+-----------------+-------------------+

Create a GPU flavor:

(undercloud) [stack@lab-director templates]$ openstack flavor create --id auto --ram 6144 --disk 40 --vcpus 4 compute-gpu

+----------------------------+--------------------------------------+

| Field | Value |

+----------------------------+--------------------------------------+

| OS-FLV-DISABLED:disabled | False |

| OS-FLV-EXT-DATA:ephemeral | 0 |

| disk | 40 |

| id | b098aaf1-f32b-458f-b4bd-4f48dba27a7a |

| name | compute-gpu |

| os-flavor-access:is_public | True |

| properties | |

| ram | 6144 |

| rxtx_factor | 1.0 |

| swap | |

| vcpus | 4 |

+----------------------------+--------------------------------------+

(undercloud) [stack@lab-director ~]$ openstack flavor list

+--------------------------------------+---------------+------+------+-----------+-------+-----------+

| ID | Name | RAM | Disk | Ephemeral | VCPUs | Is Public |

+--------------------------------------+---------------+------+------+-----------+-------+-----------+

| 0372c486-0983-43ee-97ca-6ec5cc7c6065 | block-storage | 4096 | 40 | 0 | 1 | True |

| 18baee14-3a48-4c11-90f3-9b280c03dba4 | ceph-storage | 4096 | 40 | 0 | 1 | True |

| 5b750b0f-f3ac-473c-ac81-078f81d525bb | baremetal | 4096 | 40 | 0 | 1 | True |

| 6893f4c3-0129-4b0b-a641-0cca91f07e3a | control | 4096 | 40 | 0 | 1 | True |

| afbf6532-ca93-4529-a20f-71c2e0232667 | swift-storage | 4096 | 40 | 0 | 1 | True |

| b098aaf1-f32b-458f-b4bd-4f48dba27a7a | compute-gpu | 6144 | 40 | 0 | 4 | True |

| be857621-558c-4908-8939-fe6df4b0f4cf | compute | 4096 | 40 | 0 | 1 | True |

+--------------------------------------+---------------+------+------+-----------+-------+-----------+

Introspection

Prepare instackenv.json:

[stack@lab-director ~]$ cat << EOF > ~/instackenv.json

{

"nodes": [

{

"capabilities": "profile:control",

"name": "lab-controller",

"pm_user": "admin",

"pm_password": "XXXXXXXX",

"pm_port": "6230",

"pm_addr": "192.168.122.1",

"pm_type": "pxe_ipmitool",

"mac": [

"52:54:00:f9:aa:aa"

]

},

{

"capabilities": "profile:compute",

"name": "lab-compute",

"pm_user": "root",

"pm_password": "XXXXXXXXX",

"pm_addr": "10.19.152.193",

"pm_type": "pxe_ipmitool",

"mac": [

"18:66:da:fc:aa:aa"

]

}

]

}

EOF

Import the bare metal node information into the Ironic database:

(undercloud) [stack@lab-director ~]$ openstack overcloud node import ~/instackenv.json

Waiting for messages on queue 'tripleo' with no timeout.

2 node(s) successfully moved to the "manageable" state.

Successfully registered node UUID 6031ff6f-4e90-4ec0-8ec6-0a360881dac7

Successfully registered node UUID 5fa3d40c-29b5-4540-85c9-e76ee5cb6c03

List bare metal nodes:

(undercloud) [stack@lab-director ~]$ openstack baremetal node list

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

| UUID | Name | Instance UUID | Power State | Provisioning State | Maintenance |

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

| 6031ff6f-4e90-4ec0-8ec6-0a360881dac7 | lab-controller | None | power off | manageable | False |

| 5fa3d40c-29b5-4540-85c9-e76ee5cb6c03 | lab-compute | None | power off | manageable | False |

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

Launch introspection:

(undercloud) [stack@lab-director ~]$ openstack overcloud node introspect --all-manageable --provide

Waiting for introspection to finish...

Waiting for messages on queue 'tripleo' with no timeout.

Introspection of node 6031ff6f-4e90-4ec0-8ec6-0a360881dac7 completed. Status:SUCCESS. Errors:None

Introspection of node 5fa3d40c-29b5-4540-85c9-e76ee5cb6c03 completed. Status:SUCCESS. Errors:None

Successfully introspected 2 node(s).

Introspection completed.

Waiting for messages on queue 'tripleo' with no timeout.

2 node(s) successfully moved to the "available" state.

Once introspection is done, check the bare metal node status:

(undercloud) [stack@lab-director ~]$ openstack baremetal node list

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

| UUID | Name | Instance UUID | Power State | Provisioning State | Maintenance |

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

| 6031ff6f-4e90-4ec0-8ec6-0a360881dac7 | lab-controller | None | power off | available | False |

| 5fa3d40c-29b5-4540-85c9-e76ee5cb6c03 | lab-compute | None | power off | available | False |

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

(undercloud) [stack@lab-director ~]$ openstack baremetal introspection list

+--------------------------------------+---------------------+---------------------+-------+

| UUID | Started at | Finished at | Error |

+--------------------------------------+---------------------+---------------------+-------+

| 6031ff6f-4e90-4ec0-8ec6-0a360881dac7 | 2019-01-16T22:28:51 | 2019-01-16T22:30:49 | None |

| 5fa3d40c-29b5-4540-85c9-e76ee5cb6c03 | 2019-01-16T22:28:51 | 2019-01-16T22:35:01 | None |

+--------------------------------------+---------------------+---------------------+-------+

Check the network interfaces discovered during introspection:

(undercloud) [stack@lab-director ~]$ openstack baremetal introspection interface list lab-controller

+-----------+-------------------+----------------------+-------------------+----------------+

| Interface | MAC Address | Switch Port VLAN IDs | Switch Chassis ID | Switch Port ID |

+-----------+-------------------+----------------------+-------------------+----------------+

| eth0 | 52:54:00:f9:d1:7a | [] | None | None |

+-----------+-------------------+----------------------+-------------------+----------------+

(undercloud) [stack@lab-director ~]$ openstack baremetal introspection interface list lab-compute

+-----------+-------------------+----------------------+-------------------+----------------+

| Interface | MAC Address | Switch Port VLAN IDs | Switch Chassis ID | Switch Port ID |

+-----------+-------------------+----------------------+-------------------+----------------+

| p2p1 | 3c:fd:fe:1f:bc:80 | [] | None | None |

| em4 | 18:66:da:fc:9c:2f | [] | None | None |

| p2p2 | 3c:fd:fe:1f:bc:82 | [] | None | None |

| em3 | 18:66:da:fc:9c:2e | [] | None | None |

| em2 | 18:66:da:fc:9c:2d | [] | None | None |

| em1 | 18:66:da:fc:9c:2c | [179] | 08:81:f4:a7:5f:00 | ge-1/0/35 |

+-----------+-------------------+----------------------+-------------------+----------------+

Prepare templates

Prepare deploy script:

(undercloud) [stack@lab-director ~]$ cat << EOF > ~/overcloud-deploy.sh

#!/bin/bash

time openstack overcloud deploy \

--templates /usr/share/openstack-tripleo-heat-templates \

-r /home/stack/templates/gpu_roles_data.yaml \

-e /home/stack/templates/node-info.yaml \

-e /home/stack/templates/gpu.yaml \

-e /home/stack/templates/custom-domain.yaml \

-e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \

-e /home/stack/templates/network-environment.yaml \

-e /home/stack/containers-prepare-parameter.yaml \

--timeout 60

EOF
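
A deployment that runs for close to an hour is expensive to lose to a mistyped path. As a minimal sketch, the hypothetical helper below (list_missing_templates is not a director command) scans a deploy script for the files passed with -e or -r and prints those that do not exist yet.

```shell
#!/bin/bash
# Hypothetical pre-flight helper: print every file passed with -e or -r in a
# deploy script that does not exist on disk.
list_missing_templates() {
  local script="$1" path
  # Keep only "-e <path>" and "-r <path>" pairs; long options such as
  # --templates or --timeout are not matched.
  grep -oE '(^|[[:space:]])-[er][[:space:]]+[^[:space:]]+' "$script" |
    awk '{print $2}' |
    while read -r path; do
      [ -e "$path" ] || echo "$path"
    done
}
```

Running `list_missing_templates ~/overcloud-deploy.sh` before the deployment prints nothing when every referenced file is in place.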

To determine which vGPU types your physical GPU supports, list the mediated device (mdev) types that the card exposes under /sys/class/mdev_bus. You can do this on any machine hosting the card, for example after booting a live Linux system.

[root@host1 ~]# ls /sys/class/mdev_bus/0000\:06\:00.0/mdev_supported_types/

nvidia-11 nvidia-12 nvidia-13 nvidia-14 nvidia-15 nvidia-16 nvidia-17 nvidia-18 nvidia-19 nvidia-20 nvidia-21 nvidia-210 nvidia-22

[root@host1 ~]# cat /sys/class/mdev_bus/0000\:06\:00.0/mdev_supported_types/nvidia-18/description

num_heads=4, frl_config=60, framebuffer=2048M, max_resolution=4096x2160, max_instance=4

vGPU types available on the Tesla M10 (PCI address 84:00.0), with the detail of nvidia-35 (512 MB framebuffer):

[root@host10 ~]# ls /sys/class/mdev_bus/0000\:84\:00.0/mdev_supported_types/

nvidia-155 nvidia-208 nvidia-35 nvidia-36 nvidia-37 nvidia-38 nvidia-39 nvidia-40 nvidia-41 nvidia-42 nvidia-43 nvidia-44 nvidia-4

[root@overcloud-computegpu-0 heat-admin]# cat /sys/class/mdev_bus/0000\:84\:00.0/mdev_supported_types/nvidia-35/description

num_heads=2, frl_config=45, framebuffer=512M, max_resolution=2560x1600, max_instance=16

vGPU types available on the Tesla M60 (PCI address 06:00.0), followed by the detail of nvidia-43 (4 GB framebuffer) on the Tesla M10:

[root@host10 ~]# ls /sys/class/mdev_bus/0000\:06\:00.0/mdev_supported_types/

nvidia-11 nvidia-12 nvidia-13 nvidia-14 nvidia-15 nvidia-16 nvidia-17 nvidia-18 nvidia-19 nvidia-20 nvidia-21 nvidia-210 nvidia-22

[root@overcloud-computegpu-0 heat-admin]# cat /sys/class/mdev_bus/0000\:84\:00.0/mdev_supported_types/nvidia-43/description

num_heads=4, frl_config=60, framebuffer=4096M, max_resolution=4096x2160, max_instance=2
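
Reading the description files one by one gets tedious with a dozen types per card. The sketch below prints one summary line per type; the function names mdev_field and list_vgpu_types are hypothetical, and the parsing assumes the comma-separated key=value layout seen in the description files above.

```shell
#!/bin/bash
# Print the value of one key from an mdev "description" string.
mdev_field() {
  local description="$1" key="$2"
  printf '%s\n' "$description" | tr ',' '\n' | sed -n "s/^ *${key}=//p"
}

# Print "<type> <framebuffer> <max_instance>" for every type found under an
# mdev_supported_types directory.
list_vgpu_types() {
  local types_dir="$1" type_dir desc
  for type_dir in "$types_dir"/*/; do
    [ -r "${type_dir}description" ] || continue
    desc=$(cat "${type_dir}description")
    printf '%s %s %s\n' "$(basename "$type_dir")" \
      "$(mdev_field "$desc" framebuffer)" \
      "$(mdev_field "$desc" max_instance)"
  done
}

# Example with the nvidia-18 description shown earlier.
desc='num_heads=4, frl_config=60, framebuffer=2048M, max_resolution=4096x2160, max_instance=4'
mdev_field "$desc" framebuffer    # prints 2048M
```

On the compute node this would be called as `list_vgpu_types /sys/class/mdev_bus/0000:84:00.0/mdev_supported_types`.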

Create GPU customization template:

(undercloud) [stack@lab-director templates]$ cat << EOF > ~/templates/gpu.yaml

parameter_defaults:

  ComputeExtraConfig:

    nova::compute::vgpu::enabled_vgpu_types:

      - nvidia-35

EOF
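
The environment file only works if the hiera key keeps its nesting under parameter_defaults and ComputeExtraConfig, and if the type name matches one of the sysfs entries. As a sketch, the hypothetical helper write_vgpu_env below generates the file for a given type and refuses names that do not look like nvidia-<number>; that naming rule is an assumption drawn from the listings above, not an official constraint.

```shell
#!/bin/bash
# Hypothetical helper: write a vGPU environment file for one mdev type.
# Assumption: valid type names look like "nvidia-<number>", as in the sysfs
# listings.
write_vgpu_env() {
  local vgpu_type="$1" out="$2"
  if ! printf '%s\n' "$vgpu_type" | grep -Eq '^nvidia-[0-9]+$'; then
    echo "unexpected vGPU type name: $vgpu_type" >&2
    return 1
  fi
  cat > "$out" << EOF
parameter_defaults:
  ComputeExtraConfig:
    nova::compute::vgpu::enabled_vgpu_types:
      - $vgpu_type
EOF
}
```

For example, `write_vgpu_env nvidia-35 ~/templates/gpu.yaml` writes the file, while a name such as nvidia-m35 is rejected before anything is written.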

Prepare network template:

(undercloud) [stack@lab-director ~]$ cat << EOF > ~/templates/network-environment.yaml

resource_registry:

  OS::TripleO::Compute::Net::SoftwareConfig: /home/stack/templates/nic-configs/compute.yaml

  OS::TripleO::ComputeGpu::Net::SoftwareConfig: /home/stack/templates/nic-configs/compute-gpu.yaml

  OS::TripleO::Controller::Net::SoftwareConfig: /home/stack/templates/nic-configs/controller.yaml

  #OS::TripleO::AllNodes::Validation: OS::Heat::None

parameter_defaults:

  # This sets 'external_network_bridge' in l3_agent.ini to an empty string

  # so that external networks act like provider bridge networks (they

  # will plug into br-int instead of br-ex)

  NeutronExternalNetworkBridge: "''"

  # Internal API used for private OpenStack Traffic

  InternalApiNetCidr: 172.16.2.0/24

  InternalApiAllocationPools: [{'start': '172.16.2.50', 'end': '172.16.2.100'}]

  InternalApiNetworkVlanID: 102

  # Tenant Network Traffic - will be used for VXLAN over VLAN

  TenantNetCidr: 172.16.3.0/24

  TenantAllocationPools: [{'start': '172.16.3.50', 'end': '172.16.3.100'}]

  TenantNetworkVlanID: 103

  # Public Storage Access - e.g. Nova/Glance <--> Ceph

  StorageNetCidr: 172.16.4.0/24

  StorageAllocationPools: [{'start': '172.16.4.50', 'end': '172.16.4.100'}]

  StorageNetworkVlanID: 104

  # Private Storage Access - i.e. Ceph background cluster/replication

  StorageMgmtNetCidr: 172.16.5.0/24

  StorageMgmtAllocationPools: [{'start': '172.16.5.50', 'end': '172.16.5.100'}]

  StorageMgmtNetworkVlanID: 105

  # External Networking Access - Public API Access

  ExternalNetCidr: 172.16.0.0/24

  # Leave room for floating IPs in the External allocation pool (if required)

  ExternalAllocationPools: [{'start': '172.16.0.50', 'end': '172.16.0.250'}]

  # Set to the router gateway on the external network

  ExternalInterfaceDefaultRoute: 172.16.0.1

  ExternalNetworkVlanID: 101

  # Add in configuration for the Control Plane

  ControlPlaneSubnetCidr: "24"

  ControlPlaneDefaultRoute: 172.16.16.10

  EC2MetadataIp: 172.16.16.10

  DnsServers: ['10.16.36.29']

EOF

Prepare node information template:

(undercloud) [stack@lab-director templates]$ cat << EOF > ~/templates/node-info.yaml

parameter_defaults:

  OvercloudControllerFlavor: control

  OvercloudComputeFlavor: compute

  OvercloudComputeGpuFlavor: compute-gpu

  ControllerCount: 1

  ComputeCount: 0

  ComputeGpuCount: 1

  NtpServer: 'clock.redhat.com'

  NeutronNetworkType: 'vxlan,vlan'

  NeutronTunnelTypes: 'vxlan'

EOF

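Prepare the nic-configs template files (compute.yaml, compute-gpu.yaml and controller.yaml, as referenced in network-environment.yaml) based on the documentation.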

Deploy

Check status before deployment:

(undercloud) [stack@lab-director ~]$ openstack baremetal node list

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

| UUID | Name | Instance UUID | Power State | Provisioning State | Maintenance |

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

| 6031ff6f-4e90-4ec0-8ec6-0a360881dac7 | lab-controller | None | power off | available | False |

| 5fa3d40c-29b5-4540-85c9-e76ee5cb6c03 | lab-compute | None | power off | available | False |

+--------------------------------------+--------------------+---------------+-------------+--------------------+-------------+

(undercloud) [stack@lab-director ~]$ openstack overcloud profiles list

+--------------------------------------+--------------------+-----------------+-----------------+-------------------+

| Node UUID | Node Name | Provision State | Current Profile | Possible Profiles |

+--------------------------------------+--------------------+-----------------+-----------------+-------------------+

| 6031ff6f-4e90-4ec0-8ec6-0a360881dac7 | lab-controller | available | control | |

| 5fa3d40c-29b5-4540-85c9-e76ee5cb6c03 | lab-compute | available | compute-gpu | |

+--------------------------------------+--------------------+-----------------+-----------------+-------------------+

(undercloud) [stack@lab-director ~]$ openstack stack list

(undercloud) [stack@lab-director ~]$ openstack server list

Deploy:

(undercloud) [stack@lab-director ~]$ ./overcloud-deploy.sh

...

real 57m50.873s

user 0m7.347s

sys 0m0.794s

During the deployment, a watch command can be launched in a second console to monitor the progress:

(undercloud) [stack@lab-director ~]$ watch "openstack server list;openstack baremetal node list;openstack stack list"

Check deployment done

List the overcloud servers:

(undercloud) [stack@lab-director ~]$ openstack server list

+--------------------------------------+------------------------+--------+------------------------+----------------+-------------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+------------------------+--------+------------------------+----------------+-------------+

| 94928e09-4b49-484c-a41c-1e5d7fb5ff6d | overcloud-computegpu-0 | ACTIVE | ctlplane=172.16.16.216 | overcloud-gpu | compute-gpu |

| ab1f84a3-b221-44d1-a97f-e3360774f4f3 | overcloud-controller-0 | ACTIVE | ctlplane=172.16.16.203 | overcloud-full | control |

+--------------------------------------+------------------------+--------+------------------------+----------------+-------------+

Check that the GPU compute node is registered as a Nova hypervisor:

[stack@lab-director ~]$ source overcloudrc

(overcloud) [stack@lab-director ~]$ openstack hypervisor list

+----+---------------------------------------+-----------------+-------------+-------+

| ID | Hypervisor Hostname | Hypervisor Type | Host IP | State |

+----+---------------------------------------+-----------------+-------------+-------+

| 1 | overcloud-computegpu-0.lan.redhat.com | QEMU | 172.16.2.51 | up |

+----+---------------------------------------+-----------------+-------------+-------+

Verify that the NVIDIA vGPU software package is installed and loaded correctly by checking for the VFIO drivers in the list of loaded kernel modules:

[heat-admin@overcloud-computegpu-0 ~]$ lsmod | grep vfio

nvidia_vgpu_vfio 49475 0

nvidia 16633974 10 nvidia_vgpu_vfio

vfio_mdev 12841 0

mdev 20336 2 vfio_mdev,nvidia_vgpu_vfio

vfio_iommu_type1 22300 0

vfio 32656 3 vfio_mdev,nvidia_vgpu_vfio,vfio_iommu_type1

As expected, the nouveau module is not loaded:

[root@overcloud-computegpu-0 ~]# lsmod | grep nouveau

[root@overcloud-computegpu-0 ~]#
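
Both module checks can be folded into one pass over the lsmod output. The following is only a sketch: check_vgpu_modules is a hypothetical name, and the list of required modules is taken from the listing above rather than from NVIDIA documentation.

```shell
#!/bin/bash
# Hypothetical check: read `lsmod` output on stdin, report missing vGPU
# modules and a loaded nouveau module. Returns non-zero on any finding.
check_vgpu_modules() {
  local lsmod_output module rc=0
  lsmod_output=$(cat)
  for module in nvidia_vgpu_vfio nvidia vfio_mdev mdev vfio; do
    # The trailing space keeps "nvidia" from matching "nvidia_vgpu_vfio".
    if ! printf '%s\n' "$lsmod_output" | grep -q "^${module} "; then
      echo "missing module: $module"
      rc=1
    fi
  done
  if printf '%s\n' "$lsmod_output" | grep -q '^nouveau '; then
    echo "nouveau is loaded"
    rc=1
  fi
  return "$rc"
}
```

On the compute node the call would be `lsmod | check_vgpu_modules`, which prints nothing and returns 0 when the host looks like the one shown here.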

Obtain the PCI device bus/device/function (BDF) of the physical GPU:

[heat-admin@overcloud-computegpu-0 ~]$ lspci | grep NVIDIA

06:00.0 VGA compatible controller: NVIDIA Corporation GM204GL [Tesla M60] (rev a1)

07:00.0 VGA compatible controller: NVIDIA Corporation GM204GL [Tesla M60] (rev a1)

84:00.0 VGA compatible controller: NVIDIA Corporation GM107GL [Tesla M10] (rev a2)

85:00.0 VGA compatible controller: NVIDIA Corporation GM107GL [Tesla M10] (rev a2)

86:00.0 VGA compatible controller: NVIDIA Corporation GM107GL [Tesla M10] (rev a2)

87:00.0 VGA compatible controller: NVIDIA Corporation GM107GL [Tesla M10] (rev a2)
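
Each BDF maps to a directory under /sys/class/mdev_bus. A small sketch of that mapping follows; nvidia_mdev_dirs is a hypothetical helper, and it assumes every card sits in PCI domain 0000, as in the sysfs paths used earlier.

```shell
#!/bin/bash
# Hypothetical helper: read `lspci` lines on stdin and print, for each NVIDIA
# device, the sysfs directory that lists its mdev types.
# Assumption: all devices are in PCI domain 0000.
nvidia_mdev_dirs() {
  awk '/NVIDIA/ {print "/sys/class/mdev_bus/0000:" $1 "/mdev_supported_types"}'
}
```

Used as `lspci | nvidia_mdev_dirs`, it prints one directory per physical GPU.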

Check NVIDIA driver status:

[root@overcloud-computegpu-0 sysconfig]# nvidia-smi

Wed Oct 31 04:09:58 2018

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 410.68 Driver Version: 410.68 CUDA Version: N/A |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 Tesla M60 On | 00000000:06:00.0 Off | Off |

| N/A 32C P8 23W / 150W | 14MiB / 8191MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 1 Tesla M60 On | 00000000:07:00.0 Off | Off |

| N/A 31C P8 23W / 150W | 14MiB / 8191MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 2 Tesla M10 On | 00000000:84:00.0 Off | N/A |

| N/A 27C P8 10W / 53W | 11MiB / 8191MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 3 Tesla M10 On | 00000000:85:00.0 Off | N/A |

| N/A 27C P8 10W / 53W | 11MiB / 8191MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 4 Tesla M10 On | 00000000:86:00.0 Off | N/A |

| N/A 27C P8 10W / 53W | 11MiB / 8191MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 5 Tesla M10 On | 00000000:87:00.0 Off | N/A |

| N/A 27C P8 10W / 53W | 11MiB / 8191MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

Check configuration applied by director deployment:

[root@overcloud-computegpu-0 heat-admin]# cat /var/lib/config-data/puppet-generated/nova_libvirt/etc/nova/nova.conf | grep -i nvidia

# Some pGPUs (e.g. NVIDIA GRID K1) support different vGPU types. User can use

# enabled_vgpu_types = GRID K100,Intel GVT-g,MxGPU.2,nvidia-11

enabled_vgpu_types=nvidia-43
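
The grep above also returns the commented sample lines from nova.conf. To isolate the value that is actually in effect, a sketch like the following can help; active_vgpu_types is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: print the active (uncommented) enabled_vgpu_types value
# from a nova.conf-style file, ignoring comment lines.
active_vgpu_types() {
  sed -n 's/^[[:space:]]*enabled_vgpu_types[[:space:]]*=[[:space:]]*//p' "$1"
}
```

It would be called with the same path as the cat command above.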

Check the NVIDIA vGPU Manager service status:

[root@overcloud-computegpu-0 heat-admin]# systemctl status nvidia-vgpu-mgr

● nvidia-vgpu-mgr.service - NVIDIA vGPU Manager Daemon

Loaded: loaded (/usr/lib/systemd/system/nvidia-vgpu-mgr.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2019-01-18 14:26:03 UTC; 5h 50min ago

Main PID: 10409 (nvidia-vgpu-mgr)

Tasks: 1

Memory: 220.0K

CGroup: /system.slice/nvidia-vgpu-mgr.service

└─10409 /usr/bin/nvidia-vgpu-mgr

Jan 18 14:26:01 localhost.localdomain systemd[1]: Starting NVIDIA vGPU Manager Daemon...

Jan 18 14:26:03 localhost.localdomain systemd[1]: Started NVIDIA vGPU Manager Daemon.

Jan 18 14:26:09 overcloud-computegpu-0 nvidia-vgpu-mgr[10409]: notice: vgpu_mgr_log: nvidia-vgpu-mgr daemon started

Initialize testing tenant

Prepare a script to initialize the tenant and image:

[stack@lab-director ~]$ cat << EOF > overcloud-prepare.sh

#!/bin/bash

source /home/stack/overcloudrc

openstack network create --external --share --provider-physical-network datacentre --provider-network-type vlan --provider-segment 101 external

openstack subnet create external --gateway 172.16.0.1 --allocation-pool start=172.16.0.20,end=172.16.0.49 --no-dhcp --network external --subnet-range 172.16.0.0/24

openstack router create router0

openstack router set --external-gateway external router0

openstack network create internal0

openstack subnet create subnet0 --network internal0 --subnet-range 172.31.0.0/24 --dns-nameserver 10.19.43.29

openstack router add subnet router0 subnet0

curl "https://launchpad.net/~egallen/+sshkeys" -o ~/.ssh/id_rsa_lambda.pub

openstack keypair create --public-key ~/.ssh/id_rsa_lambda.pub lambda

openstack flavor create --ram 1024 --disk 40 --vcpus 2 m1.small

curl http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img -o /var/images/x86_64/cirros-0.3.5-x86_64-disk.img

openstack image create cirros035 --file /var/images/x86_64/cirros-0.3.5-x86_64-disk.img --disk-format qcow2 --container-format bare --public

openstack floating ip create external

openstack floating ip create external

openstack floating ip create external

openstack floating ip create external

openstack security group create web --description "Web servers"

openstack security group rule create --protocol icmp web

openstack security group rule create --protocol tcp --dst-port 22:22 --src-ip 0.0.0.0/0 web

openstack server create --flavor m1.small --image cirros035 --security-group web --nic net-id=internal0 --key-name lambda instance0

FLOATING_IP_ID=\$( openstack floating ip list -f value -c ID --status 'DOWN' | head -n 1 )

openstack server add floating ip instance0 \$FLOATING_IP_ID

openstack server list

echo "Type: openstack server ssh --login cirros instance0"

EOF
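
The script is written through an unquoted here-document, which is why the command substitution and the FLOATING_IP_ID variable are escaped with a backslash: without it, the shell would expand them while writing the file instead of when the script runs. The sketch below illustrates the difference on scratch files; the variable NAME and the file names are made up for the demonstration.

```shell
#!/bin/bash
workdir=$(mktemp -d)
NAME=expanded-now

# Unquoted delimiter: $NAME is expanded while the file is written,
# \$NAME is written as a literal $NAME.
cat << EOF > "$workdir/unquoted.sh"
echo $NAME
echo \$NAME
EOF

# Quoted delimiter: nothing is expanded, so no escaping is needed.
cat << 'EOF' > "$workdir/quoted.sh"
echo $NAME
EOF

cat "$workdir/unquoted.sh" "$workdir/quoted.sh"
```

Quoting the delimiter is the simpler choice when a generated script contains many variables.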

Prepare the tenant:

[stack@lab-director ~]$ source ~/overcloudrc

(overcloud) [stack@lab-director ~]$ ./overcloud-prepare.sh

+--------------------------------------+-----------+--------+-----------------------------------+-----------+----------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+-----------+--------+-----------------------------------+-----------+----------+

| 83974e70-63ad-4887-b8c7-e5c068a2a740 | instance0 | ACTIVE | internal0=172.31.0.4, 172.16.0.26 | cirros035 | m1.small |

+--------------------------------------+-----------+--------+-----------------------------------+-----------+----------+

(overcloud) [stack@lab-director ~]$ openstack server ssh instance0 --login cirros

The authenticity of host '172.16.0.26 (172.16.0.26)' can't be established.

RSA key fingerprint is SHA256:dl+U9Ky5xnaWZCcAjmgyTQ/e230qog/Z2gylPaMX2X0.

RSA key fingerprint is MD5:8e:bf:c4:52:e2:97:2d:31:89:d9:25:7b:9e:13:d4:76.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '172.16.0.26' (RSA) to the list of known hosts.

cirros@172.16.0.26's password:

$ uptime

20:31:31 up 1 min, 1 users, load average: 0.00, 0.00, 0.00

Delete the instance:

(overcloud) [stack@lab-director ~]$ openstack server delete instance0

Prepare guest image with GRID

Create the NVIDIA license configuration file (gridd.conf):

[stack@lab-director x86_64]$ mkdir -p /var/images/x86_64/nvidia-guest/

[stack@lab-director x86_64]$ cat << EOF > /var/images/x86_64/nvidia-guest/gridd.conf

# /etc/nvidia/gridd.conf.template - Configuration file for NVIDIA Grid Daemon

# This is a template for the configuration file for NVIDIA Grid Daemon.

# For details on the file format, please refer to the nvidia-gridd(1)

# man page.

# Description: Set License Server Address

# Data type: string

# Format: "<address>"

ServerAddress=dhcp158-15.virt.lab.eng.bos.redhat.com

# Description: Set License Server port number

# Data type: integer

# Format: <port>, default is 7070

ServerPort=7070

# Description: Set Backup License Server Address

# Data type: string

# Format: "<address>"

#BackupServerAddress=

# Description: Set Backup License Server port number

# Data type: integer

# Format: <port>, default is 7070

#BackupServerPort=

# Description: Set Feature to be enabled

# Data type: integer

# Possible values:

# 0 => for unlicensed state

# 1 => for GRID vGPU

# 2 => for Quadro Virtual Datacenter Workstation

FeatureType=2

# Description: Parameter to enable or disable Grid Licensing tab in nvidia-settings

# Data type: boolean

# Possible values: TRUE or FALSE, default is FALSE

EnableUI=TRUE

# Description: Set license borrow period in minutes

# Data type: integer

# Possible values: 10 to 10080 mins(7 days), default is 1440 mins(1 day)

#LicenseInterval=1440

# Description: Set license linger period in minutes

# Data type: integer

# Possible values: 0 to 10080 mins(7 days), default is 0 mins

#LingerInterval=10

EOF
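
A typo in gridd.conf only shows up once the guest fails to obtain a license, so a quick check before building the image can save a rebuild. The sketch below relies only on the rules stated in the template comments above (a server address must be set, FeatureType is 0, 1 or 2); gridd_value and check_gridd_conf are hypothetical helper names.

```shell
#!/bin/bash
# Hypothetical helper: print the value of an uncommented key in a
# gridd.conf-style file (the last occurrence if the key is repeated).
gridd_value() {
  sed -n "s/^$2=//p" "$1" | tail -n 1
}

# Hypothetical sanity check based on the comments in the template.
check_gridd_conf() {
  local file="$1"
  if [ -z "$(gridd_value "$file" ServerAddress)" ]; then
    echo "ServerAddress is not set"
    return 1
  fi
  case "$(gridd_value "$file" FeatureType)" in
    0|1|2) ;;
    *) echo "FeatureType must be 0, 1 or 2"; return 1 ;;
  esac
}
```

For the file created here, the call would be `check_gridd_conf /var/images/x86_64/nvidia-guest/gridd.conf`.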

Build an ISO image from the nvidia-guest directory, which holds gridd.conf and the NVIDIA GRID guest driver installer:

[root@lab607 guest]# genisoimage -o nvidia-guest.iso -R -J -V NVIDIA-GUEST /var/images/x86_64/nvidia-guest/

I: -input-charset not specified, using utf-8 (detected in locale settings)

9.06% done, estimate finish Mon Jan 21 05:09:34 2019

18.08% done, estimate finish Mon Jan 21 05:09:34 2019

27.14% done, estimate finish Mon Jan 21 05:09:34 2019

36.17% done, estimate finish Mon Jan 21 05:09:34 2019

45.22% done, estimate finish Mon Jan 21 05:09:34 2019

54.25% done, estimate finish Mon Jan 21 05:09:34 2019

63.31% done, estimate finish Mon Jan 21 05:09:34 2019

72.34% done, estimate finish Mon Jan 21 05:09:34 2019

81.39% done, estimate finish Mon Jan 21 05:09:34 2019

90.42% done, estimate finish Mon Jan 21 05:09:34 2019

99.48% done, estimate finish Mon Jan 21 05:09:34 2019

Total translation table size: 0

Total rockridge attributes bytes: 358

Total directory bytes: 0

Path table size(bytes): 10

Max brk space used 0

55297 extents written (108 MB)

Prepare customization script step1:

[root@lab607 guest]# cat << EOF > nvidia-guest_step1.sh

#!/bin/bash

# Remove nouveau driver

if grep -Fq "modprobe.blacklist=nouveau" /etc/default/grub

then

echo "Nothing to do for Nouveau driver"

else

# Nouveau driver not removed

# To fix https://bugzilla.redhat.com/show_bug.cgi?id=1646871

sed -i 's/crashkernel=auto\"\ /crashkernel=auto\ /' /etc/default/grub

sed -i 's/net\.ifnames=0$/net.ifnames=0\"/' /etc/default/grub

# Remove Nouveau

sed -i 's/GRUB_CMDLINE_LINUX="[^"]*/& modprobe.blacklist=nouveau/' /etc/default/grub

echo ">>>> /etc/default/grub:"

cat /etc/default/grub | grep nouveau

grub2-mkconfig -o /boot/grub2/grub.cfg

fi

# Add build tooling

subscription-manager register --username myrhnaccount --password XXXXXXX

subscription-manager attach --pool=XXXXXXXXXXXXXXXXXXXXXXXXXX

subscription-manager repos --disable=*

subscription-manager repos --enable=rhel-7-server-rpms --enable=rhel-7-server-extras-rpms

yum upgrade -y

yum install -y gcc make kernel-devel cpp glibc-devel glibc-headers kernel-headers libmpc mpfr

EOF
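
Because virt-customize may be run several times against the same image, the kernel command line edit should be safe to repeat. The sketch below exercises that edit on a scratch file rather than on /etc/default/grub; blacklist_nouveau is a hypothetical helper, the sample line is illustrative, and GNU sed is assumed for the -i option.

```shell
#!/bin/bash
# Hypothetical helper: append modprobe.blacklist=nouveau to GRUB_CMDLINE_LINUX
# in the given file, unless the option is already present.
blacklist_nouveau() {
  local grub_file="$1"
  if grep -Fq "modprobe.blacklist=nouveau" "$grub_file"; then
    echo "Nothing to do for Nouveau driver"
  else
    sed -i 's/GRUB_CMDLINE_LINUX="[^"]*/& modprobe.blacklist=nouveau/' "$grub_file"
  fi
}

# Scratch copy with illustrative content, not a real /etc/default/grub.
grub_copy=$(mktemp)
echo 'GRUB_CMDLINE_LINUX="console=tty0 crashkernel=auto net.ifnames=0"' > "$grub_copy"
blacklist_nouveau "$grub_copy"
blacklist_nouveau "$grub_copy"   # second run leaves the file unchanged
cat "$grub_copy"
```

The check uses a substring match because the option lives inside the GRUB_CMDLINE_LINUX line; a whole-line match would never find it and the option would be appended on every run.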

Prepare customization script step2:

[stack@lab-director x86-64]$ cat << EOF > nvidia-guest_step2.sh

#!/bin/bash

# NVIDIA GRID guest script

mkdir /tmp/mount

mount LABEL=NVIDIA-GUEST /tmp/mount

/bin/sh /tmp/mount/NVIDIA-Linux-x86_64-410.71-grid.run -s --kernel-source-path /usr/src/kernels/3.10.0-957.1.3.el7.x86_64

mkdir -p /etc/nvidia

cp /tmp/mount/gridd.conf /etc/nvidia

EOF

Apply customization:

[stack@lab-director x86-64]$ cp rhel-server-7.6-x86_64-kvm.qcow2 rhel-server-7.6-x86_64-kvm-guest.qcow2

[stack@lab-director x86-64]$ sudo virt-customize -a rhel-server-7.6-x86_64-kvm-guest.qcow2 --root-password password:XXXXXXXX

[ 0.0] Examining the guest ...

[ 2.8] Setting a random seed

[ 2.8] Setting the machine ID in /etc/machine-id

[ 2.8] Setting passwords

[ 4.3] Finishing off

[stack@lab-director x86-64]$ time sudo virt-customize --memsize 8192 --attach nvidia-guest.iso -a rhel-server-7.6-x86_64-kvm-guest.qcow2 -v --run nvidia-guest_step1.sh

real 4m32.928s

user 0m0.128s

sys 0m0.159s

[stack@lab-director x86-64]$ time sudo virt-customize -a rhel-server-7.6-x86_64-kvm-guest.qcow2 --selinux-relabel

real 0m18.080s

user 0m0.083s

sys 0m0.064s

[stack@lab-director x86-64]$ time sudo virt-customize --memsize 8192 --attach nvidia-guest.iso -a rhel-server-7.6-x86_64-kvm-guest.qcow2 -v --run nvidia-guest_step2.sh

real 0m18.898s

user 0m0.123s

sys 0m0.152s

[stack@lab-director x86-64]$ time sudo virt-customize -a rhel-server-7.6-x86_64-kvm-guest.qcow2 --selinux-relabel

Upload image into Glance:

(overcloud) [stack@lab-director tmp]$ openstack image create rhel76vgpu --file /tmp/rhel-server-7.6-x86_64-kvm-guest.qcow2 --disk-format qcow2 --container-format bare --public

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| checksum | 9c4bd93ad42a9f67501218a765be8c58 |

| container_format | bare |

| created_at | 2019-01-22T13:20:49Z |

| disk_format | qcow2 |

| file | /v2/images/13b8fb20-6c84-4b8b-83c9-df7a88b29e7b/file |

| id | 13b8fb20-6c84-4b8b-83c9-df7a88b29e7b |

| min_disk | 0 |

| min_ram | 0 |

| name | rhel76vgpu |

| owner | 83458e214cb14e388208207ac42a701d |

| properties | direct_url='swift+config://ref1/glance/13b8fb20-6c84-4b8b-83c9-df7a88b29e7b', os_hash_algo='sha512', os_hash_value='a7d243ea7fdce2e0a43e1157aea030048d3ea0a8a1a09e7347412663e173bf80714f1124e1e8d8885127aa71a87a041dd3cffafea731aa012a5a05c2c953f406', os_hidden='False' |

| protected | False |

| schema | /v2/schemas/image |

| size | 2030567424 |

| status | active |

| tags | |

| updated_at | 2019-01-22T13:21:15Z |

| virtual_size | None |

| visibility | public |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

Create NVIDIA GPU flavor:

(overcloud) [stack@lab-director ~]$ openstack flavor create --vcpus 6 --ram 8192 --disk 100 m1.small-gpu

+----------------------------+--------------------------------------+

| Field | Value |

+----------------------------+--------------------------------------+

| OS-FLV-DISABLED:disabled | False |

| OS-FLV-EXT-DATA:ephemeral | 0 |

| disk | 100 |

| id | 34576fcf-b2a8-4505-832f-6f9c393d2b34 |

| name | m1.small-gpu |

| os-flavor-access:is_public | True |

| properties | |

| ram | 8192 |

| rxtx_factor | 1.0 |

| swap | |

| vcpus | 6 |

+----------------------------+--------------------------------------+

Customize GPU flavor:

(overcloud) [stack@lab-director ~]$ openstack flavor set m1.small-gpu --property "resources:VGPU=1"

(overcloud) [stack@lab-director ~]$ openstack flavor show m1.small-gpu

+----------------------------+--------------------------------------+

| Field | Value |

+----------------------------+--------------------------------------+

| OS-FLV-DISABLED:disabled | False |

| OS-FLV-EXT-DATA:ephemeral | 0 |

| access_project_ids | None |

| disk | 100 |

| id | 34576fcf-b2a8-4505-832f-6f9c393d2b34 |

| name | m1.small-gpu |

| os-flavor-access:is_public | True |

| properties | resources:VGPU='1' |

| ram | 8192 |

| rxtx_factor | 1.0 |

| swap | |

| vcpus | 6 |

+----------------------------+--------------------------------------+
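
The `resources:VGPU=1` property is what makes the scheduler request one vGPU for the instance, so it is worth checking before booting anything. The sketch below assumes the properties string has the same form as in the output above (`resources:VGPU='1'`); other client versions may print it differently. `flavor_requests_vgpu` is a hypothetical helper name.

```shell
# flavor_requests_vgpu PROPERTIES: succeed when the properties string
# contains resources:VGPU='1', in the form printed by `openstack flavor show` above.
flavor_requests_vgpu() {
    case "$1" in
        *"resources:VGPU='1'"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example:
# flavor_requests_vgpu "$(openstack flavor show m1.small-gpu -f value -c properties)" \
#     || echo "m1.small-gpu does not request a vGPU" >&2
```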

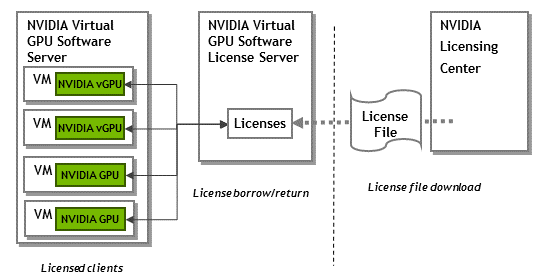

Virtual GPU License Server

NVIDIA vGPU software is a licensed product. Licensed vGPU features are activated during guest OS boot, when the guest acquires a software license over the network from an NVIDIA vGPU software license server.

To manage a pool of floating licenses for NVIDIA vGPU software products, you need to install a Virtual GPU License Server. More information here: https://docs.nvidia.com/grid/latest/grid-license-server-user-guide/index.html

After the installation, open an SSH tunnel from your laptop to reach the NVIDIA license server:

[egallen@my-desktop ~]$ ssh -N -L 8080:localhost:8080 root@my-nvidia-licence-server.redhat.com
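
Since `ssh -N` stays in the foreground and prints nothing, a second terminal can be used to check that the forwarded port answers. This is a minimal sketch: `retry` is a hypothetical helper, and the attempt count and delay are arbitrary values.

```shell
# retry ATTEMPTS DELAY COMMAND...
# Run COMMAND until it succeeds or ATTEMPTS tries have been made.
retry() {
    attempts=$1
    delay=$2
    shift 2
    i=1
    while :; do
        "$@" && return 0
        [ "$i" -ge "$attempts" ] && return 1
        i=$((i + 1))
        sleep "$delay"
    done
}

# Example, with the tunnel from the previous command still open:
# retry 5 2 curl -sf -o /dev/null http://localhost:8080/licserver/ \
#     && echo "license server reachable through the tunnel"
```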

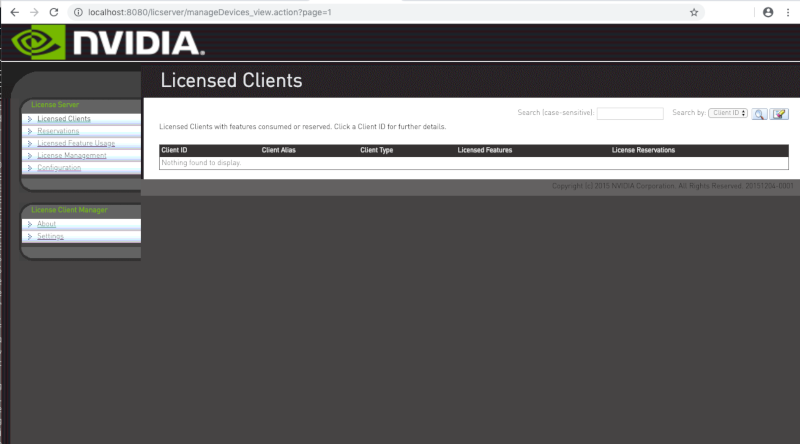

Check the NVIDIA license server at http://localhost:8080/licserver/ before booting the instance:

No instance is registered before the boot:

Test a vGPU instance

Create an instance with vGPU:

(overcloud) [stack@lab-director ~]$ openstack server create --flavor m1.small-gpu --image rhel76vgpu --security-group web --nic net-id=internal0 --key-name lambda instance0

+-------------------------------------+-----------------------------------------------------+

| Field | Value |

+-------------------------------------+-----------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | |

| OS-EXT-SRV-ATTR:host | None |

| OS-EXT-SRV-ATTR:hypervisor_hostname | None |

| OS-EXT-SRV-ATTR:instance_name | |

| OS-EXT-STS:power_state | NOSTATE |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | None |

| OS-SRV-USG:terminated_at | None |

| accessIPv4 | |

| accessIPv6 | |

| addresses | |

| adminPass | bEY8Tx7UxjkX |

| config_drive | |

| created | 2019-01-22T13:22:21Z |

| flavor | m1.small-gpu (34576fcf-b2a8-4505-832f-6f9c393d2b34) |

| hostId | |

| id | 2a6a9ae7-a630-431a-8cb9-42a57abb44a4 |

| image | rhel76vgpu (13b8fb20-6c84-4b8b-83c9-df7a88b29e7b) |

| key_name | lambda |

| name | instance0 |

| progress | 0 |

| project_id | 83458e214cb14e388208207ac42a701d |

| properties | |

| security_groups | name='fd72c7f2-e6c0-452d-b448-411f790397a3' |

| status | BUILD |

| updated | 2019-01-22T13:22:21Z |

| user_id | b0f1fa1cbcef44cb8ae0958afb7d5de6 |

| volumes_attached | |

+-------------------------------------+-----------------------------------------------------+
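
The instance is still in `BUILD` right after the create call, so it can help to wait for `ACTIVE` before attaching the floating IP. The loop below is a sketch under my own assumptions: `wait_for_active` is a hypothetical helper, and the default of 30 tries with a 5 second delay is arbitrary.

```shell
# wait_for_active SERVER [TRIES] [DELAY]
# Poll the server status until it is ACTIVE, fail fast on ERROR, give up after TRIES polls.
wait_for_active() {
    server=$1
    tries=${2:-30}
    delay=${3:-5}
    while [ "$tries" -gt 0 ]; do
        status=$(openstack server show "$server" -f value -c status)
        case "$status" in
            ACTIVE) return 0 ;;
            ERROR)  echo "$server is in ERROR state" >&2; return 1 ;;
        esac
        tries=$((tries - 1))
        sleep "$delay"
    done
    echo "timed out waiting for $server" >&2
    return 1
}

# Example:
# wait_for_active instance0 && openstack server add floating ip instance0 "$FLOATING_IP_ID"
```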

(overcloud) [stack@lab-director ~]$ FLOATING_IP_ID=$( openstack floating ip list -f value -c ID --status 'DOWN' | head -n 1 )

(overcloud) [stack@lab-director ~]$ echo $FLOATING_IP_ID

44a9fbed-b4f7-4627-bd1e-b639e642e1da

(overcloud) [stack@lab-director ~]$ openstack server add floating ip instance0 $FLOATING_IP_ID

(overcloud) [stack@lab-director ~]$ openstack server list

+--------------------------------------+-----------+--------+-----------------------------------+------------+--------------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+-----------+--------+-----------------------------------+------------+--------------+

| 658c5862-fbea-4676-a848-2a7338ba506a | instance0 | ACTIVE | internal0=172.31.0.4, 172.16.0.26 | rhel76vgpu | m1.small-gpu |

+--------------------------------------+-----------+--------+-----------------------------------+------------+--------------+

(overcloud) [stack@lab-director ~]$ ping 172.16.0.26

PING 172.16.0.26 (172.16.0.26) 56(84) bytes of data.

64 bytes from 172.16.0.26: icmp_seq=9 ttl=62 time=0.755 ms

64 bytes from 172.16.0.26: icmp_seq=10 ttl=62 time=0.667 ms

64 bytes from 172.16.0.26: icmp_seq=11 ttl=62 time=0.730 ms

(overcloud) [stack@lab-director ~]$ ssh cloud-user@172.16.0.26

The authenticity of host '172.16.0.26 (172.16.0.26)' can't be established.

ECDSA key fingerprint is SHA256:LJSDFi3ktg0somXrzyXm68zxbx44Szf8bdt1X486gmY.

ECDSA key fingerprint is MD5:48:d5:3b:be:82:f2:c9:e1:4f:fb:b6:52:0c:44:54:ae.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '172.16.0.26' (ECDSA) to the list of known hosts.

[cloud-user@instance0 ~]$ nvidia-smi

Tue Jan 22 08:30:44 2019

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 410.71 Driver Version: 410.71 CUDA Version: 10.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 GRID M10-4Q On | 00000000:00:05.0 Off | N/A |

| N/A N/A P8 N/A / N/A | 272MiB / 4096MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+
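
The vGPU profile appears in the GPU name (`GRID M10-4Q` here), which makes it easy to check from a script that the instance got the profile you expected. A minimal sketch, where `vgpu_profile` and `has_profile` are hypothetical helper names:

```shell
# vgpu_profile NAME: print the profile suffix of a vGPU name, e.g. "GRID M10-4Q" -> "4Q".
vgpu_profile() {
    echo "${1##*-}"
}

# has_profile NAME EXPECTED: succeed when the vGPU name ends with the expected profile.
has_profile() {
    [ "$(vgpu_profile "$1")" = "$2" ]
}

# Example, inside the instance:
# has_profile "$(nvidia-smi --query-gpu=name --format=csv,noheader)" 4Q \
#     || echo "unexpected vGPU profile" >&2
```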

Check nvidia-gridd service:

[cloud-user@instance0 ~]$ sudo systemctl status nvidia-gridd.service

● nvidia-gridd.service - NVIDIA Grid Daemon

Loaded: loaded (/usr/lib/systemd/system/nvidia-gridd.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2019-01-22 08:29:35 EST; 2min 27s ago

Process: 18377 ExecStart=/usr/bin/nvidia-gridd (code=exited, status=0/SUCCESS)

Main PID: 18378 (nvidia-gridd)

CGroup: /system.slice/nvidia-gridd.service

└─18378 /usr/bin/nvidia-gridd

Jan 22 08:29:35 instance0 systemd[1]: Starting NVIDIA Grid Daemon...

Jan 22 08:29:35 instance0 systemd[1]: Started NVIDIA Grid Daemon.

Jan 22 08:29:35 instance0 nvidia-gridd[18378]: Started (18378)

Jan 22 08:29:35 instance0 nvidia-gridd[18378]: Ignore Service Provider Licensing.

Jan 22 08:29:35 instance0 nvidia-gridd[18378]: Calling load_byte_array(tra)

Jan 22 08:29:36 instance0 nvidia-gridd[18378]: Acquiring license for GRID vGPU Edition.

Jan 22 08:29:36 instance0 nvidia-gridd[18378]: Calling load_byte_array(tra)

Jan 22 08:29:39 instance0 nvidia-gridd[18378]: License acquired successfully. (Info: http://dhcp158-15.virt.lab.eng.bos.redhat.com:7070/request; GRID...l-WS,2.0)

Hint: Some lines were ellipsized, use -l to show in full.
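
The line to look for in this output is `License acquired successfully`. For an automated check, the same message can be searched in the journal. This is a sketch that assumes the daemon logs the message exactly as shown above; `license_acquired` is a hypothetical helper.

```shell
# license_acquired: read log lines on stdin, succeed when the
# "License acquired successfully" message from nvidia-gridd is present.
license_acquired() {
    grep -q "License acquired successfully"
}

# Example, inside the instance:
# sudo journalctl -u nvidia-gridd.service --no-pager | license_acquired \
#     && echo "vGPU license acquired"
```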

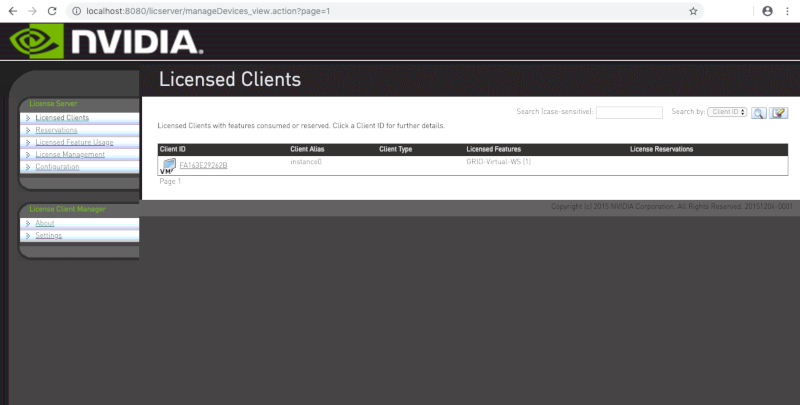

Check the NVIDIA license server after the instance launch:

Appendix: How to get the NVIDIA GRID RPM packages

To enable NVIDIA GRID you will need: